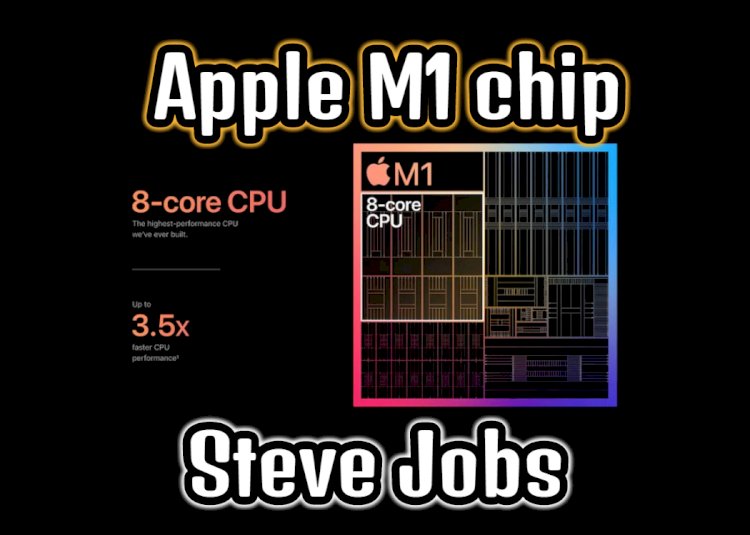

Apple's M1 chip: Steve Jobs's final trump card


Editor's note: Apple's M1 chip has been out for some time, and numerous articles have interpreted the significance of this 16-billion-transistor chip. Perhaps the most thorough is this one by Om Malik, a former technology media editor. He argues that M1 is the last piece of the puzzle in Jobs's vision. In his view, it is not only of great significance to Apple but will also define the future of computing. The original was published on his personal blog under the title "Steve Jobs's last gambit: Apple's M1 Chip".

Key points:

The environment is changing, and so is the computer

We need computers that can handle many tasks, and handle them quickly. The emphasis is less on performance and more on capability

Jobs's final gambit was to challenge the classic notion of the computer, and M1 is Apple's latest move

M1 integrates many technologies into a single chip, including the CPU, GPU, memory, and machine learning

The lesson Jobs learned the hard way: don't rely on third parties to drive your key innovations and capabilities

Software = data + API; chip integration and specialization; computing beyond the text interface; machine learning defines the future of software

The transition to the M family will take two years

Apple's last event of 2020 is slowly receding in the rearview mirror, but I still can't stop thinking about the M1 chip that debuted there. When it comes to technology and its impact on society, I am an optimist at heart. My excitement about Apple's new products is not limited to this chip, or a computer, or even this company. Rather, it has to do with the continuing (and even accelerating) shift toward the next phase of computing.

The traditional, desktop-centric notion of computing predates many things we now take for granted in the smartphone era. These include always-on connectivity, ambient intelligence in software systems, and the growing dependence of everyday activities on computing resources. Today's computers are changing shape. They are servers in the cloud, laptops in our bags, and smartphones in our pockets. What a desktop computer could do only five years ago now fits inside a keyboard, in the form of a Raspberry Pi that costs just $50. Cars and TVs are computers, just like everything else.

In such an environment, we need our computers to handle many tasks and to handle them quickly. The emphasis is less on performance and more on capability. Everyone is moving toward this future, including Intel, AMD, Samsung, Qualcomm, and Huawei. But Apple's move is more deliberate, more comprehensive, and bolder.

Jobs's final gambit was to challenge the classic notion of the computer, and M1 is Apple's latest move. The new chip will debut in the entry-level versions of the MacBook Air, the Mac mini, and the 13-inch MacBook Pro (which I have been using for the past three days). To better understand Apple's plans, I recently spoke with three of its executives:

- Greg "Joz" Joswiak, senior vice president of worldwide marketing
- Johny Srouji, senior vice president of hardware technologies
- Craig Federighi, senior vice president of software engineering

These conversations offered a glimpse of the future, and not just Apple's future.

What is M1?

But first, let's look at what M1 actually is.

Traditionally, computers have been built from separate, discrete chips. M1 is a system-on-chip (SoC). It combines many technologies on a single integrated circuit, including the central processing unit (CPU), graphics processing unit (GPU), memory, and machine learning. Specifically, M1 includes:

- An 8-core CPU with four high-performance cores and four high-efficiency cores
- An 8-core integrated GPU
- A 16-core Apple Neural Engine
- Fabrication on an industry-leading 5nm process
- 16 billion transistors on a single chip
- Apple's latest image signal processor (ISP), for higher-quality video
- A Secure Enclave, which handles security tasks such as Touch ID authentication
- An Apple-designed Thunderbolt controller with support for USB 4 and transfer speeds of up to 40Gbps

Apple claimed in a press release that, "compared with the previous generation of Macs, M1 delivers 3.5 times faster CPU performance, 6 times faster GPU performance, 15 times faster machine learning, and 2 times longer battery life."

This confidence could not contrast more sharply with Apple's position in the heyday of the personal computer. Back then, the symbiotic Wintel alliance of Windows and Intel dominated the industry. Apple depended on chips from its financially and technically weaker partners, IBM and Motorola, and was pushed to the margins. Facing a bleak outlook, Apple had no choice but to switch to Intel processors. Then, slowly, it began to win back market share bit by bit.

To stay competitive, Apple had to build and control everything. Jobs learned this the hard way. The software, the hardware, the user experience, and the chips that power it all had to be in Apple's own hands. He called this "the whole widget." I wrote in a 2017 article that today's giants have a critical need for vertical integration, and it boils down to one sentence: "Don't rely on third parties to be the drivers of your key innovations and capabilities."

For Apple, the iPhone represented a chance to start over. That new journey began with the A-series chips, Apple's first in-house designs, which went into the iPhone 4 and the first-generation iPad. In the years that followed, these chips became ever more powerful and smarter, able to take on complex tasks. Yet even as their capabilities grew enormously, their power demands stayed modest. This balance of performance and efficiency made the A-series chips a game changer. The latest version, the A14 Bionic, powers the newest generation of iPhones and now iPads.

Apple's products are increasingly powered by chips from its own growing army of silicon wizards. But there was one glaring exception: the Mac, the product that built Apple's fortune.

Then came M1.

Joswiak said: "Steve used to say that we make the whole widget. We have been making the whole widget for all of our products, from the iPhone to the iPad to the Watch. This (M1) was the final element in making the whole widget for the Mac."

Why is M1 important?

Modern computing is changing. Software is becoming an endpoint for data, and work gets done through application programming interfaces (APIs).

Chips have become so complex that they need integration and specialization to keep power consumption in check and deliver better performance.

By having its chip, hardware, and software teams work together, Apple has defined a future in which all three are tightly integrated.

Future computing goes beyond text interfaces: visual and auditory interfaces are key.

Machine learning will define the capabilities of future software.

M1 is very similar to the Apple chips in the iPhone and iPad, but more powerful. It uses Apple's unified memory architecture (UMA). The memory (DRAM) sits in the same package as the chip. Every component that needs memory, such as the CPU, GPU, image signal processor, and Neural Engine, shares that single pool. As a result, every part of the chip can reach the same data without copying it between components or sending it over an external interconnect. Memory access has extremely low latency and extremely high bandwidth. The result is lower power consumption and better performance.
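The data-movement argument can be made concrete with a deliberately simplified Python model. It is an illustration under stated assumptions, not Apple's design or a benchmark. The model assumes two separate memory pools in the discrete-GPU case and one shared pool in the unified case. It also assumes a hypothetical per-frame pipeline: the CPU writes a frame, the GPU applies an effect, and the CPU reads the result back. The function name `bytes_copied` is invented for this sketch, and the model ignores caches, bandwidth, and latency entirely.

```python
"""Toy model (hypothetical): bytes copied between memory pools in a CPU/GPU pipeline."""

# One uncompressed 4K frame at 4 bytes per pixel (RGBA): 3840 x 2160 x 4 bytes.
FRAME_BYTES = 3840 * 2160 * 4


def bytes_copied(unified: bool, frames: int, frame_bytes: int = FRAME_BYTES) -> int:
    """Return the number of bytes copied across the bus for `frames` frames.

    Hypothetical pipeline per frame: the CPU writes the frame, the GPU applies an
    effect, and the CPU reads the result back. With separate memory pools
    (discrete GPU) each hand-off needs a copy; with one shared pool (unified
    memory) the data never moves.
    """
    total = 0
    for _ in range(frames):
        # The CPU produces the frame in its own memory (or in the shared pool).
        location = "shared" if unified else "system"
        # The GPU consumes it first, then the CPU reads the processed result back.
        for consumer in ("gpu", "system"):
            target = "shared" if unified else consumer
            if location != target:
                total += frame_bytes  # copy the whole frame over the bus
                location = target
    return total


if __name__ == "__main__":
    for unified in (False, True):
        label = "unified" if unified else "discrete"
        moved = bytes_copied(unified, frames=60)
        print(f"{label}: {moved / 1e9:.2f} GB copied for 60 frames")
```

For 60 frames, the discrete case copies about 3.98 GB (two full-frame copies per frame), and the unified case copies nothing. Real systems use DMA engines, compression, and caching. The sketch therefore shows only why removing copies helps, not M1's actual figures.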

With this new technology, everything from video conferencing and gaming to image processing and web browsing should run more smoothly. In my experience so far, that holds true. I have been using a 13-inch M1 MacBook Pro with 8GB of RAM and 256GB of storage. Web pages load quickly in Safari, and most apps optimized for M1 (which Apple calls "universal apps") also run fast. I haven't had the machine for long, but my first impressions are quite positive.

Some analysts are very optimistic about Apple's prospects. In a note to clients, Richard Kramer of Arete Research pointed out that M1 is the world's first chip built on a 5-nanometer process, which puts it a generation ahead of its x86 rivals. "Apple is producing chips with world-leading specs relative to x86, at less than half the $150 to $200 that PC OEMs pay for theirs," Kramer wrote. "And Apple's unified memory architecture (UMA) lets M1 use less DRAM and NAND." He expects Apple to launch two new chips next year, both aimed at higher-end machines, one of them the iMac.

I don't think AMD and Intel are Apple's real competitors. We should be looking at Qualcomm as the next significant laptop chip supplier. Apple's M1 will spark rivals' interest in new architectures.

Consider the whole approach: integrate everything into one chip, maximize throughput, access memory quickly, optimize compute for each task, and adapt to machine-learning algorithms. This approach is the future, not just for mobile chips but for desktops and laptops too. It is a huge change for Apple and for the entire PC industry.

The transition will not happen overnight

The fact that M1 is debuting in entry-level machines has raised some eyebrows. However, according to Morgan Stanley research, these three models accounted for 91% of Mac shipments over the past 12 months.

Federighi told me: "The people who aren't buying this part of our product line right now seem to be waiting for us to develop chips for the other parts of the lineup they're most excited about. And, you know, their day will come. But for now, in every way I can think of, the systems we're building are better than the ones they replace."

The migration to the M family will take up to two years. What we see now is likely just the first of many variants of this chip, with more to come in different kinds of Apple computers.

For Apple, this is a huge change, with risks all along the way. It means moving its entire community from the x86 platform to a new chip architecture. A whole generation of software must support the new chip while backward compatibility is maintained. Joswiak cautioned: "This will take a couple of years, because a transition like this never happens overnight. But we have been very successful with major transitions like this in the past."

The most significant past transition came in 2005. Held back by the steady decline of the PowerPC ecosystem, the company made the difficult decision to switch to the superior Intel ecosystem. The move to x86 came on the heels of another big shift: the arrival of a new operating system, Mac OS X. These changes were enormously painful for developers and end customers alike.

Despite the turbulence, Apple fortunately still had one major asset: Steve Jobs. He kept everyone focused on building a powerful, robust, and competitive platform, one that could take on Wintel and deliver bigger returns. He was right.

I moved from an old Mac to a computer running OS X. After years of frustration with underpowered machines, I finally started to enjoy using a Mac. I was not alone. The move helped Apple hold its ground, especially among developers and creative professionals. Eventually, mainly thanks to the iPod and the iPhone, the broader public joined the Apple ecosystem.

In a recent keynote, Apple CEO Tim Cook pointed out that half of the Macs Apple sold went to buyers who were new to the Mac. Last quarter, the Mac business grew by nearly 30%, and this has been the best year in Mac history. Apple sold more than 5.5 million Macs in 2020 and currently has a 7.7% market share. In fact, many of these buyers probably don't know or care which chip runs their computer.

However, many of those who do care have been conditioned by the billion-dollar marketing budgets of Intel and the Windows PC makers. They think in terms of gigahertz, memory, and speed. The idea that bigger numbers mean better quality is deeply rooted in how people think about laptops and desktops. This mindset will pose a huge challenge for Apple.

But Intel and AMD have to talk about gigahertz and power. They are parts suppliers, and the only way they can charge more is by offering higher specs. Srouji said proudly: "We are a product company. We make exquisite products that tightly integrate software and hardware. It isn't about gigahertz and megahertz; it's only about what customers get out of them."

Johny Srouji, Apple's senior vice president of hardware technology.

Srouji, a chip-industry veteran who previously worked at IBM and Intel, now leads Apple's huge silicon organization. No one cares about the clock speed of the chip in an iPhone, and as he sees it, the same will eventually be true of the Mac. Instead, people will care about "how many tasks can be completed on a single battery charge." Srouji said M1 is not a general-purpose chip but one built "for the best use of our products, and tightly integrated with the software."

He said: "I believe Apple's model is the best and unique. We are developing a custom chip that perfectly fits the product and how the software uses it. When we design a chip, three or four years ahead of time, Craig and I sit together, decide what products to deliver, and then work on them together. Intel or AMD or any other company can't do this."

According to Federighi, integration and these specialized execution engines are a long-term trend. "It's getting harder to keep putting more transistors on a piece of silicon. So it has become increasingly important to integrate more of these components tightly together and to build purpose-built silicon that solves specific problems in the system." M1 has 16 billion transistors. By comparison, the chips from its laptop rivals, AMD (Zen 3 APU) and Intel (Tiger Lake), have about 10 billion each.

Federighi also spoke about the symbiotic relationship between Apple's hardware and software. "Being in a position to jointly define the right chip for the computer we want to make, and then to build that chip at scale, is profoundly significant." Both teams work to look three years ahead and imagine what future systems will look like. Then they develop software and hardware for that future.

Craig Federighi, senior vice president of software engineering at Apple

Redefining performance

The M1 chip cannot be viewed in isolation. It is the silicon-level embodiment of what is happening across computing, especially in the software layer. Largely because of mobile devices that must stay connected all the time, computers now also have to turn on instantly, so users can glance at them, interact, and walk away. These devices need low latency and high efficiency, with a greater emphasis on privacy and data protection. They can't have fans, run hot, make noise, or run out of battery. This is now everyone's expectation, so software must keep pace too.

The desktop is the last territory left to conquer. One defining feature of traditional desktop computing is the file system. All software shares the same storage area, and users work hard (with varying success) to keep it organized. That approach worked well in a world where software and its functions operated at the scale of an individual. But we now live in a connected world that operates at network scale. This new reality demands modern software, the kind we see and use on our phones every day. These changes won't happen tomorrow, but the snowball is already rolling downhill.

In the traditional model, an application or program sits on the hard drive and runs when the user wants to use it. But we are moving toward a new model in which applications have many entry points. They will supply data for use elsewhere and everywhere. They will respond to notifications and events about what the user is doing or where they are.

Modern software has many entry points. Look at the latest mobile OS changes, and you will see new mechanisms such as App Clips and widgets. They will gradually reshape our perceptions and expectations of applications. These trends suggest that an app is becoming a two-way endpoint, essentially an application programming interface, that responds to data in real time. Our apps are becoming more personalized and intelligent the more we use them. Our interactions define their capabilities. The application is always learning.

Apple is bringing its desktop, tablet, and mobile operating systems together on a single chip architecture across its entire product line. As it does, traditional performance metrics will no longer be meaningful.

Federighi said: "For a long time, the metrics commonly used in the industry have stopped being good indicators of task-level performance." You no longer need to care about CPU specs. Instead, you will think in terms of tasks. "Architecturally, how many streams of 4K or 8K video can you handle at once while applying a particular effect? That is the question video professionals want answered, and no chip spec can answer it for them."

Srouji pointed out that although the new chip is optimized for compactness and performance, it can still do more than traditional designs. Take the GPU. The most critical shift in computing is from text-based computing to visual computing. Video and image processing have become an indispensable part of our computing experience, whether we are on a Zoom video call, watching Netflix, editing photos, or cutting video clips. That is why the GPU has become as essential to a computer as any other chip. Intel's chips, for example, offer integrated graphics, but these are no match for discrete graphics cards. Discrete cards, in turn, must use the PCIe interface to talk to the rest of the machine.

Apple developed a higher-end integrated graphics engine and paired it with a faster, more capable unified memory architecture. As a result, M1 can even outperform computers with a discrete GPU, whose dedicated memory is separate from the computer's main memory.

Why is this important?

Modern graphics chips are no longer just about rendering triangles. Instead, the GPU is where complex interactions among different parts of the computer take place. Data has to be shuttled quickly among the video decoder, the image signal processor, rendering, compute, and rasterization. That means a great deal of data movement.

Federighi pointed out: "With a discrete GPU, you have to move data back and forth across the system bus, and that starts to dominate performance." That is why computers heat up, fans spin like turbochargers, and machines need more memory and more powerful chips. M1 uses UMA to eliminate (at least in theory) the need to move data back and forth. Apple also uses a new approach to optimized rendering that processes multiple tiles in parallel. This has let the company remove complexity associated with the video system.
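Rendering in tiles can be illustrated with a small Python sketch of the generic tile-based pattern. It is an assumption-laden illustration, not Apple's GPU pipeline. The frame is cut into fixed-size tiles, and each tile is shaded independently on a thread pool. The results are then stitched back into one frame. The `shade` function and the 16-pixel tile size are hypothetical placeholders. On real GPUs, the benefit comes from keeping each tile in fast on-chip memory, which Python threads cannot demonstrate. The sketch therefore shows the structure of the technique, not its speedup.

```python
"""Generic tile-based rendering sketch (illustrative; not Apple's GPU pipeline)."""
from concurrent.futures import ThreadPoolExecutor
from typing import Iterator

Bounds = tuple[int, int, int, int]  # (x0, y0, x1, y1), end-exclusive


def shade(x: int, y: int) -> int:
    """Hypothetical per-pixel shader: a simple gradient value in 0..255."""
    return (x * 7 + y * 13) % 256


def tiles(width: int, height: int, tile: int) -> Iterator[Bounds]:
    """Yield the bounds of each tile, clipping the last row/column at the edge."""
    for ty in range(0, height, tile):
        for tx in range(0, width, tile):
            yield tx, ty, min(tx + tile, width), min(ty + tile, height)


def render_tile(bounds: Bounds) -> tuple[Bounds, list[list[int]]]:
    """Shade one tile independently of all the others."""
    x0, y0, x1, y1 = bounds
    block = [[shade(x, y) for x in range(x0, x1)] for y in range(y0, y1)]
    return bounds, block


def render_tiled(width: int, height: int, tile: int = 16, workers: int = 4) -> list[list[int]]:
    """Render all tiles on a pool of workers, then stitch them into one frame."""
    frame = [[0] * width for _ in range(height)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for (x0, y0, x1, y1), block in pool.map(render_tile, tiles(width, height, tile)):
            for dy, row in enumerate(block):
                frame[y0 + dy][x0:x1] = row
    return frame


def render_direct(width: int, height: int) -> list[list[int]]:
    """Reference: shade the whole frame in one pass."""
    return [[shade(x, y) for x in range(width)] for y in range(height)]


if __name__ == "__main__":
    w, h = 50, 37
    assert render_tiled(w, h) == render_direct(w, h)  # tiling must not change the image
    print(len(list(tiles(w, h, 16))), "tiles")  # 4 columns x 3 rows -> prints "12 tiles"
```

The design choice worth noting is that tiles share no state. Any tile can be processed by any worker in any order, and the stitched frame is identical to a single-pass render.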

Srouji said: "A long time ago, most processing was done on the CPU. Now, a lot of processing happens across the CPU, the graphics and neural engines, and the image signal processor."

This will only keep changing. Machine learning, for example, will play a bigger role in our future, which is why the Neural Engine must keep evolving to keep pace. Apple has its own algorithms, so it needs its own hardware to keep up with them.

Likewise, voice interfaces will become a major part of computing. Chips like M1 let Apple use its hardware to overcome many of Siri's limitations and put it on par with Amazon's Alexa and Google Home. Siri does seem more accurate on my M1-based machine.

In human terms, all of this means your computer is ready the moment you turn it on. It won't scorch your lap during a Zoom call. And the battery won't die while you're talking to your mom.

It's remarkable that these seemingly small changes, which most of us don't even notice, can change our lives.
